Visible and understandable

In general, Katrin Beyer’s experience of freely accessible data has been very positive: “Our research is made more visible, cited more frequently and has a bigger impact.” Florian Altermatt also considers higher visibility to be an advantage. “According to our records, our datasets are accessed between 20 and 80 times.”

Both of them appreciate the fact that open data lends their research greater credibility. In the words of Florian Altermatt: “Other researchers are able to follow my measurements and examine the results. So I don’t need to worry.”

Open data, of course

Researchers who receive money from the SNSF now also need to make their research data available. How this requirement can be met is exemplified by Katrin Beyer and Florian Altermatt. They have been part of an open data culture for years.

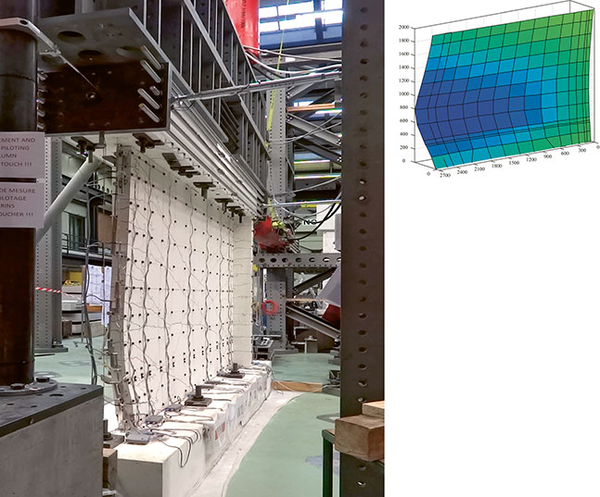

Can the reinforced concrete withstand the displacement forces acting on it? In a large laboratory at EPF Lausanne, Katrin Beyer and her team are investigating how the walls of buildings deform during earthquakes. Each series of experiments generates several hundred gigabytes of data: photographs, videos, measurement data, reports.

Progress accelerated

Since starting the experiments in 2010, Katrin Beyer, professor of earthquake engineering, has published a large amount of such data. “In our field, we collaborate closely with other universities. Therefore it makes sense to allow open access to the data, particularly if they were generated in costly and time-consuming experiments.” By working together, research teams will be able to improve earthquake protection more rapidly.

And for Beyer, there is another reason why publishing the data seems the right thing to do: “Our research is funded by the taxpayer. So the data belong to the public.”

Data management from day one of the project

Her view is shared by Florian Altermatt, SNSF professor for community ecology at the University of Zurich and team leader at the Swiss federal research facility Eawag in Dübendorf. Altermatt began storing his research data in public archives ten years ago. His research interests include biodiversity patterns in rivers and the measurement of that biodiversity using environmental DNA (eDNA).

His team are under clear instructions: from day one of the project, all research data must be continually managed and edited. This ensures that all members of the team have access to them – even ten years after the project, when the undergraduate or doctoral student is no longer around. “Publishing the existing data is the logical next step; it doesn’t cost much and can be done in next to no time,” says Florian Altermatt.

Katrin Beyer’s team also edit the data so that they can be used by internal researchers who were not involved in the experiment. Thanks to this systematic data management, publishing is easy and cheap. According to Beyer, it probably doesn’t account for more than 1% of the project costs. “And we also have the benefit of an external backup.”

Protecting young researchers

And the limits of openness? Neither Beyer nor Altermatt uses data that are considered sensitive for legal or ethical reasons. For such data, the open access commitment does not apply. For Altermatt it is vital that young researchers make their data available only once they have published their master’s thesis or dissertation. “Otherwise someone else could overtake my team member and, if worst comes to worst, damage their career.” He also acknowledges the risk of suboptimal data analyses by third parties resulting in claims that are not backed up by the data.

Katrin Beyer mentions the problem of ever-increasing amounts of data. “We are currently making high-resolution images of the concrete walls; this generates several terabytes with each series of experiments. It is not possible to store so much data in the archives we have used until now.” Archives with greater storage capacity are therefore needed.

But after all is said and done, both researchers still firmly support an open data culture. They are in no doubt that it is an integral part of science today. This is precisely what the SNSF hopes to achieve with the new requirement.